When Deep Blue won a game against chess great Garry Kasparov, everyone wondered if human intelligence had finally met its match.

◊

Philadelphia, 1996. The Liberty Bell, cheesesteaks, and . . . chess?

That’s right, chess. It was in Philly that year that grandmaster and world chess champion Garry Kasparov saved the world from an imminent, dystopian future of subservience to supercomputers. Well, not really, but for many it felt that way. IBM’s Deep Blue had stunned everyone by beating Kasparov in the first game. Ultimately, however, Kasparov won the match four to two in a traditional chess format governed by tournament regulations and classic time controls – and humanity breathed a collective sigh of relief and gratitude.

The win shouldn’t have been too much of a surprise. After all, back in 1989, Kasparov had easily dispatched an experimental version of the chess-playing program – then called Deep Thought – in an exhibition game. At the time, Kasparov quipped that Deep Thought’s programmers should “teach it to resign earlier.” Still, the extraordinary fact that, less than a decade later, the IBM program won even a single game against the master sent shockwaves through the chess community and beyond.


So, by 1996, it seemed as if at least one computer was smart or intelligent or could do something like what we call thinking. In other words, it seemed as if the prediction of mathematician, WWII codebreaker, and computer science theorist Alan Turing had finally come to pass: Machines could imitate humans well enough to warrant our belief in their intelligence.

The term “artificial intelligence” (AI) was coined in the 1950s. John McCarthy, among the earliest AI pioneers, defined AI as “the science and engineering of making intelligent machines.” Today, AI has evolved to include machine learning techniques such as neural networks, whose structures and processes are loosely modeled on the human brain’s neural signaling.

Imitation Game

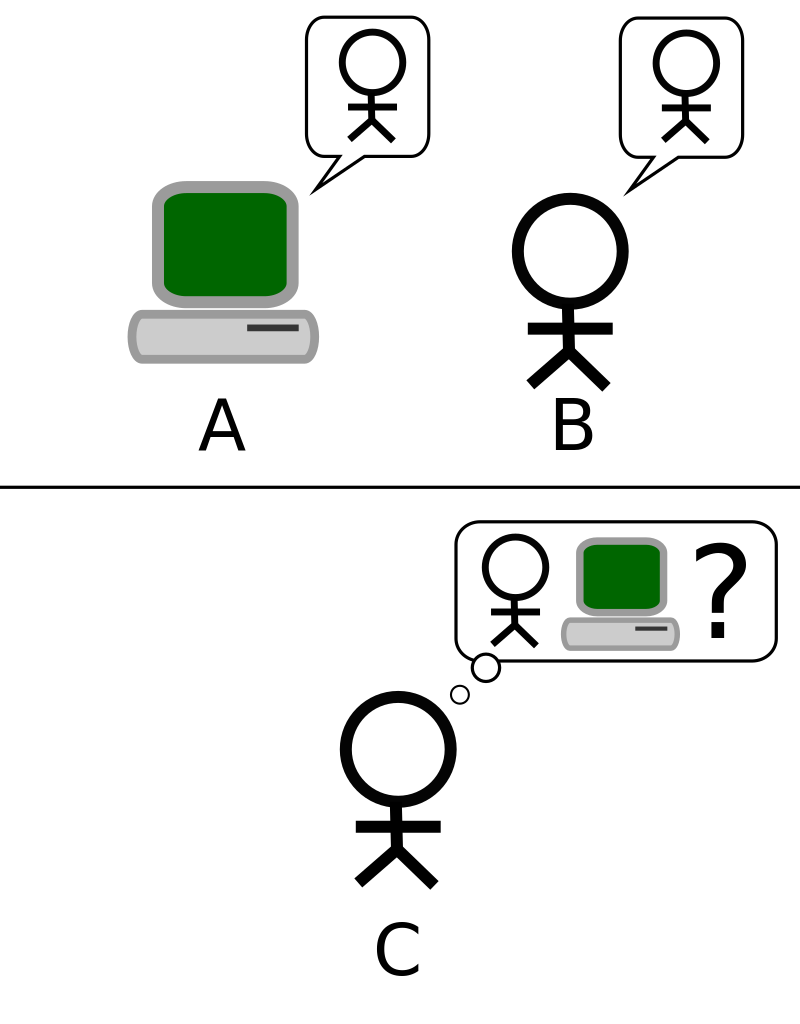

In his 1950 paper “Computing Machinery and Intelligence,” Turing had proposed a version of a popular Victorian parlor game, in which one person tries to figure out which of two other people is a woman by asking a series of questions. The questioner is in one room, while a woman and a man are in two other rooms. The man’s job is to throw off the interrogator by giving misleading answers to questions.

The “standard interpretation” of the Turing Test (Credit: Hugo Férée, via Wikimedia Commons)

In Turing’s version of the Imitation Game, a computer replaces the deceptive man. Now the object of the game is for the computer to fool the interrogator into believing it is a person. Turing theorized that, “at the end of the century . . . one will be able to speak of machines thinking without expecting to be contradicted.”


Though Deep Blue ultimately lost the 1996 match against Kasparov, its win in the opening game of the series left a lot of humans sitting back on their haunches, wondering what was next. Did that achievement mean Deep Blue was “thinking”?

By all accounts, Deep Blue’s initial success in 1996 was just a matter of brute-force processing. Still, Deep Blue had game: after 37 moves, Kasparov resigned, ending the first game of the match. Kasparov came back to win the match and declared that Deep Blue was not invincible, but human hubris about our own intellectual dominance had arguably been exposed.

IBM’s Deep Blue (Credit: Christina Xu, via Wikimedia Commons)

There is No Ghost, Only the Machine

After IBM computer scientists upgraded Deep Blue, the second match was set for 1997. Once again, the world held its breath, and kept holding it for six games, until Kasparov resigned. Deep Blue was victorious, and for the first time in his life, the 34-year-old Garry Kasparov had lost a chess match. And he lost it to a computer.

Kasparov had previously lost to computer programs, but those were speed competitions, which aren’t considered real tests of skill. Skill involves much more than anticipating hundreds of millions of possible moves in a second.

After the shocking upset, people had…thoughts. Some, for example, believed that Deep Blue’s win suggested it was smarter than a very smart human. And, in a restricted way, that was true. After all, the young AI had more brute processing power than its human counterpart. By 1997, Deep Blue could evaluate up to 200 million positions per second. Humans can’t do that. Perhaps this fact feeds current worries about an AI-ruled dystopian future.


Garry Kasparov at the 1982 Chess Olympiad (Credit: Krtschil, via Wikimedia Commons)

Deep Blue also had some important advantages. It didn’t get tired, or frustrated, or worried. It didn’t have an ego, let alone hubris. It merely processed . . . and processed and processed. It predicted myriad branching paths from multiple possible moves in a fraction of the time it takes even the best chess players to consider them.
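To make those “branching paths” concrete, here is a minimal sketch of exhaustive game-tree search, a textbook technique usually called minimax, run on a tiny made-up tree. It is an illustration only, not Deep Blue’s actual code: the tree, its scores, and the function name `minimax` are all assumptions invented for this example.

```python
from typing import Union

# A toy game tree. A leaf is a number saying how good the final position is
# for the machine; an inner node is a list of positions reachable in one move.
Tree = Union[int, list]

def minimax(node: Tree, machine_to_move: bool) -> int:
    """Return the best score the machine can guarantee from this position."""
    if isinstance(node, int):
        return node  # end of a path: nothing left to explore
    # Explore every branch, alternating whose turn it is.
    scores = [minimax(child, not machine_to_move) for child in node]
    # The machine picks the highest score; its opponent picks the lowest.
    return max(scores) if machine_to_move else min(scores)

# Hypothetical position: the machine has three moves, and the opponent
# has two replies to each of them.
toy_tree = [[3, 5], [2, 9], [0, 7]]
print(minimax(toy_tree, machine_to_move=True))  # 3
```

This toy tree has only six endpoints. A real chess position typically offers a few dozen legal moves, so the tree balloons with every extra move of lookahead, which is why raw speed mattered so much.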

Deep Blue is, after all, made up of circuit boards and silicon, and humans are flesh and blood, which means that thinking is radically different for us than for the computer – assuming Deep Blue thinks at all. Moreover, chess-playing algorithms perform one activity – however excellent that performance is – but human beings are capable of intelligence and creativity in a wide range of activities. Maybe Deep Blue isn’t such a smarty pants, after all.

The Logic of Chess

These days, chess apps abound. Whether you’re a beginner, grandmaster, or somewhere in between, you can find a program against which you can test your skills. What you and the program share is formal logic. More specifically, you share fundamental reasoning structures. No surprise there, since human beings write the algorithms that define a digital chess player’s “mind.”

Consider the following logically correct reasoning: A or B. Not A. So, B. In other words, the denial of one half of the “or” statement logically yields the other half. Indeed, this is something we begin to grasp when we’re quite young. How? Well, think about what happens when the reasoning involves a lie.

Meet Pat, a child no older than five, and no younger than four. (Trigger alert: I’m going to make Pat cry; but don’t worry, Pat is imaginary.)

“Pat,” I say, crouching down to meet the child’s eye level. “I have candy in this hand,” I continue, shaking my left fist for emphasis, “or this one.” Now I shake the other fist.

Pat nods, eyes gleaming with the prospect of candy. “Oh-kway.”

I open my left fist. “It’s not in this hand, so . . .”

Now Pat is practically jumping up and down with excitement. “That one! That one!” the child exclaims, batting at my right fist. Yes! It’s the logically correct inference – the only possible one, given the circumstances. But…

I open my right hand – it’s empty. Pat stares, dumbfounded. Confusion is replaced in turns by incredulity, horror, and finally devastation, as Pat processes this reality. A moment later, Pat dissolves into doleful sobs and runs into my friend’s outstretched arms. (My friend’s eyes shoot daggers at me. I shrug, as if to say, “Sorry, life is hard. So is logic.”)

All at once, Pat turns toward me and, through sobs and snot, cries, “You lied to me!”

Pat’s right, and that’s because the logic demands that candy be in the right hand. The fact that it’s not means one of my other two statements is false, but I cruelly presented it as true. Which one is the lie? “I have candy in one hand or the other.”

No, this isn’t Kasparov after losing to Deep Blue; it’s Pat!

(Credit: Bob Dmyt, via Pixabay)

Yes, I made Pat up, but anyone who has even a passing familiarity with small, jam-handed children recognizes that Pat is typical of an average four-to-five-year-old. These little creatures implicitly understand the principle of noncontradiction, which is at the heart of logically correct inferences in formal logic. Dear little Pat may be too young to conceptualize this point but understands that I must have lied. Otherwise, the reasoning is impossible.
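Pat’s inference (A or B; not A; so B) can also be checked mechanically. The sketch below is an illustration, with function and variable names made up for the occasion. It tries every combination of true and false for A and B, confirms that the conclusion never fails when both premises hold, and then replays the candy trick to show which statement had to be the lie.

```python
from itertools import product

def valid_disjunctive_syllogism() -> bool:
    """Check 'A or B; not A; so B' against every possible truth assignment."""
    for a, b in product([True, False], repeat=2):
        premises_hold = (a or b) and (not a)
        if premises_hold and not b:
            return False  # counterexample: true premises, false conclusion
    return True

print(valid_disjunctive_syllogism())  # True

# Pat's situation: the left hand is empty (A is false) and so is the right
# hand (B is false). What does that make the promise "A or B"?
candy_in_left, candy_in_right = False, False
promise = candy_in_left or candy_in_right
print(promise)  # False: the "or" statement was the lie
```

Because the inference is valid, an empty right hand plus an honest “not in this hand” leaves only one culprit: the opening promise.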

The Human in the Machine

What this means is that both non-human and human reasoning machines can draw logically correct inferences. Indeed, no artificial intelligence would be able to do so if it weren’t programmed accordingly. In other words, formal logical systems underpin computing algorithms, including those used in chess programs.

Fidelity Voice Chess Challenger, the first of its kind, 1979.

(Credit: Tiia Monto, via Wikimedia Commons)

What makes playing chess (and similar games, such as Go) so challenging is that you have to scale these bite-sized, tactical pieces of reasoning in order to think strategically. A sophisticated chess player, for example, sets up and solves multiple strategies and sub-strategies with an eye toward the endgame, or the point at which “most of the pieces have been exchanged.”

In his 1847 book, The Mathematical Analysis of Logic, George Boole developed the system of logic consisting of three operators – not, and, and or – in place of standard arithmetical operators.

Those strategies are like blueprints or plans, but the player must be both conversant enough with various strategies and versatile enough to adjust or shift in response to their opponent’s moves without losing sight of the endgame. Indeed, that’s how Kasparov won the 1996 match. Strategies assume goals and nested goals, and then tactics generate the details of reaching them.

The End Game for Intelligent Machines and Chess

The idea of thinking machines – the sort we now see in the various digital chess players populating the programs we have on our phones, tablets, laptops, and desktops – is not new. In fact, it dates back well before Turing first hypothesized what we now call the Turing Test for intelligence.

For example, in 1666, a young German philosopher and mathematician, Gottfried Wilhelm Leibniz, published a paper theorizing that knowledge could be automated. He called it his “great instrument of reason.” An automated reasoning machine could, Leibniz theorized, resolve disagreements. “When there are disputes among persons,” he asserted, “we can simply say, ‘Let us calculate,’ and without further ado, see who is right.”

Could it be that, more than 350 years ago, we imagined a future in which machines would do our thinking for us?

Fast forward to 2023. The World Computer Chess Championship, a tournament between chess programs held intermittently since 1974, was held in Valencia, Spain. Yes, chess programs have their own tournaments. Who’s the smarty pants now?

Ω

Mia Wood is a philosophy professor at Pierce College in Woodland Hills, California, and an adjunct instructor at the University of Rhode Island, Community College of Rhode Island, Salve Regina University, and Providence College. She is also a MagellanTV staff writer interested in the intersection of philosophy and everything else. She lives in Little Compton, Rhode Island.

Title Image source: Pexels